Exploring the Sling Feature Model: Part 2 - Composite NodeStore

Published by Dan Klco

In my previous post Exploring the Sling Feature Model, I described the process of migrating from a Sling Provisioning project setup to a Sling Feature Model project.

Now that we have the Sling Provisioning Model project converted, we can move on to the fun stuff and create a Composite NodeStore. We'll use Docker to build the Composite NodeStore into the container image.

Creating the Composite NodeStore Seed

The Composite NodeStore works by combining one or more static "secondary" node stores with a mutable primary NodeStore. In the case of AEM as a Cloud Service, the /apps and /libs directories are mounted as a secondary SegmentStore, while the remainder of the repository is mounted as a MongoDB-backed Document Store.

For our simplified example, we will create a secondary static SegmentStore for /apps and /libs and combine that with a primary SegmentStore for the remainder of the repository. Since the secondary SegmentStore will be read-only, we must "seed" the repository to pre-create the static paths /apps and /libs.
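To make the routing idea concrete, here is a toy sketch in Python. It is not how Oak implements the Composite NodeStore; the `Mount` and `CompositeStore` classes are hypothetical names invented for illustration. It only shows the principle: paths under /apps and /libs go to one mount, everything else goes to the global store, and the mount is writable during seeding but read-only afterwards.

```python
from dataclasses import dataclass, field


@dataclass
class Mount:
    """A toy stand-in for a node store: a name, a dict of nodes, a read-only flag."""
    name: str
    read_only: bool = False
    nodes: dict = field(default_factory=dict)


class CompositeStore:
    """Routes each path to the mount that owns it, else to the global store."""

    def __init__(self, global_store, mounts):
        self.global_store = global_store
        # mounts maps a path prefix such as "/libs" to the Mount that owns it
        self.mounts = mounts

    def store_for(self, path):
        for prefix, mount in self.mounts.items():
            # Match the prefix itself or any descendant, but not "/libsfoo"
            if path == prefix or path.startswith(prefix + "/"):
                return mount
        return self.global_store

    def write(self, path, value):
        store = self.store_for(path)
        if store.read_only:
            raise PermissionError(f"{path} is in read-only mount '{store.name}'")
        store.nodes[path] = value

    def read(self, path):
        return self.store_for(path).nodes.get(path)


libs = Mount("libs")
composite = CompositeStore(Mount("global"), {"/apps": libs, "/libs": libs})

# Seeding: the libs mount is still writable, so /apps and /libs can be created.
composite.write("/libs/sling/cms", "seeded component")
composite.write("/content/site", "page")

# Runtime: flip the libs mount to read-only; the global store stays mutable.
libs.read_only = True
```

After the flip, writing under /libs or /apps raises an error while writes to /content still succeed, which is the behavior the seed and runtime Feature Models are meant to produce.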

To do this, we have a feature dedicated to seeding the repository, in which /apps and /libs are temporarily mutable. We can then use the aggregate-features goal of the Sling Feature Maven Plugin to combine it with the primary Feature Model and create the feature slingcms-composite-seed. When we start a Sling instance using this feature, it creates the nodes under these paths based on the feature contents.

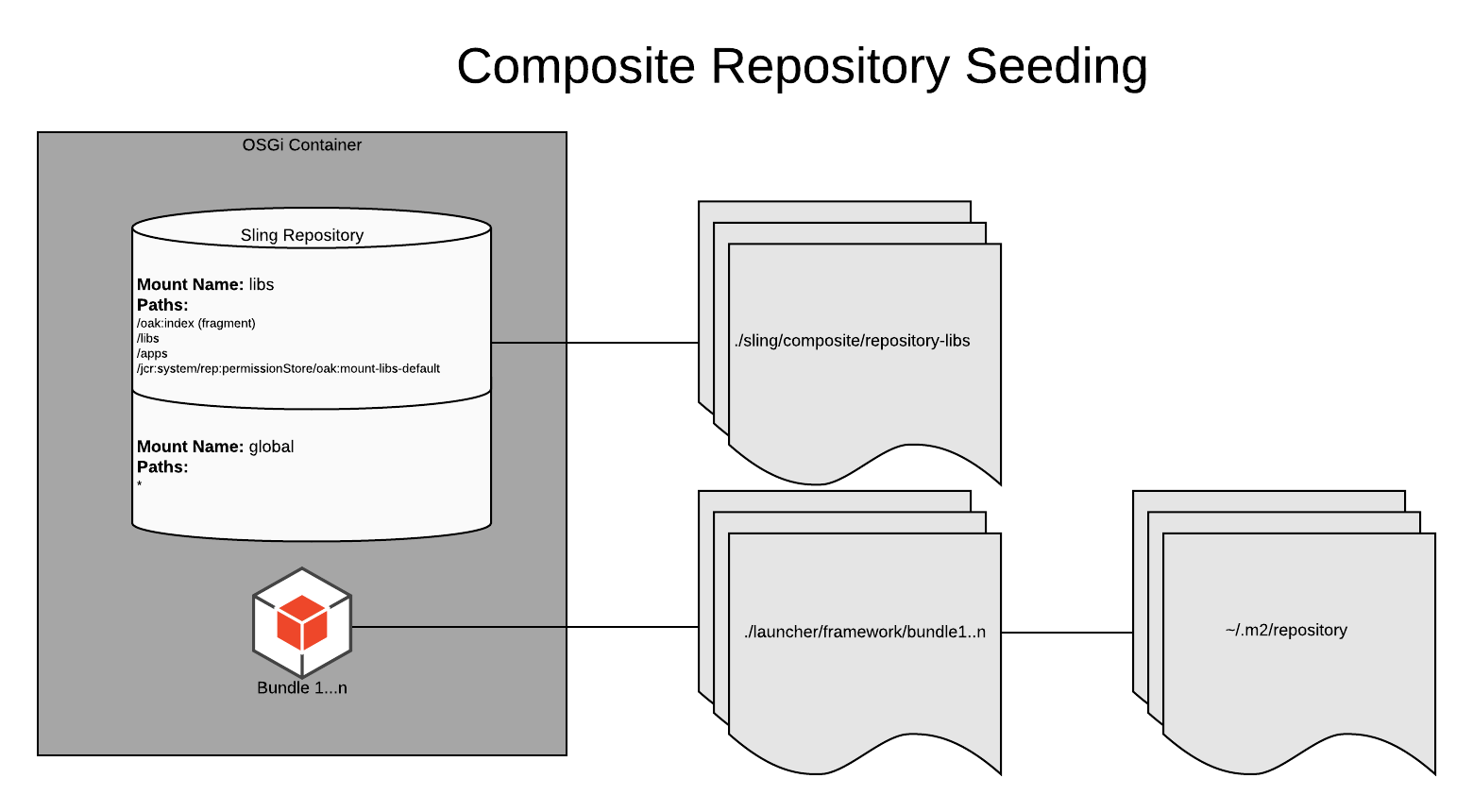

As shown in the diagram above, during seeding the repository content is written to the libs SegmentStore. It's also worth mentioning that with the Feature Model Launcher, by default, the OSGi Framework runs in a completely different directory from the repository and pulls the bundle JARs from the local Maven repository.

Our updated Dockerfile runs the following steps to build the container image:

- Downloads the Feature Model Launcher JAR and Feature Model JSON files

- Starts the Sling instance using the slingcms-composite-seed model in the background

- Polls the Felix Health Checks until the "systemalive" tag returns an HTTP 200 status

- Once the 200 status is returned, stops the Sling instance, cleans up the launcher, and symlinks the SegmentStore directory into the expected path
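The polling step is the part most worth getting right, so here is a rough sketch of that logic in Python. This is not the script from the Dockerfile, which isn't reproduced in this post. The function names, retry counts, and the health check URL are all assumptions for illustration; check the servlet path and port against your own instance.

```python
import time
import urllib.error
import urllib.request


def http_status(url, timeout=5):
    """Return the HTTP status code for url, or None if the server is unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # e.g. a 5xx while health checks are still failing
    except (urllib.error.URLError, OSError):
        return None  # connection refused or timed out: not listening yet


def wait_until_alive(url, fetch=http_status, attempts=60, delay=5.0, sleep=time.sleep):
    """Poll url until fetch returns 200; True on success, False if attempts run out."""
    for attempt in range(attempts):
        if fetch(url) == 200:
            return True
        if attempt < attempts - 1:
            sleep(delay)  # no point sleeping after the final attempt
    return False


# Assumed endpoint; adjust host, port, and path to match your instance.
HEALTH_URL = "http://localhost:8080/system/health?tags=systemalive"
```

Calling `wait_until_alive(HEALTH_URL)` blocks until the instance reports healthy or the attempts run out. Passing `fetch` and `sleep` as parameters is a design choice that lets the retry loop be exercised without a running Sling instance.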

Naming Gaffes

Hopefully, you are more careful than me, but one thing to keep in mind is that the Sling Feature Launcher will happily start as long as it has a valid model. For example, you can easily spend a significant amount of time trying to understand why nothing responds with this model:

org.apache.sling.cms.feature-0.16.3-SNAPSHOT-composite-seed.slingosgifeature

Instead of the one I meant:

org.apache.sling.cms.feature-0.16.3-SNAPSHOT-slingcms-composite-seed.slingosgifeature

Since the non-aggregate model is a valid model, the Sling Feature Launcher will happily start, but it simply creates an OSGi container with only a couple of configurations, which naturally does... nothing.
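One way to catch this earlier is a quick sanity check on the model before launching it. The sketch below is a heuristic of my own, not something the launcher provides: it assumes the feature file has already been parsed into a Python dict with the usual top-level "bundles" and "configurations" sections, and it flags any model that has no bundles. The function names and the sample ids, bundles, and PIDs are made up for illustration.

```python
def describe_feature(feature):
    """Summarize a parsed feature model dict: id, bundle count, configuration count."""
    return {
        "id": feature.get("id", "<no id>"),
        "bundles": len(feature.get("bundles", [])),
        "configurations": len(feature.get("configurations", {})),
    }


def looks_like_aggregate(feature, min_bundles=1):
    """Heuristic guard: a feature with no bundles will start but serve nothing."""
    return describe_feature(feature)["bundles"] >= min_bundles


# Illustrative data only; the real models contain far more entries.
seed_only = {
    "id": "org.example:example.feature:slingosgifeature:composite-seed:1.0.0",
    "configurations": {"example.pid.one": {}, "example.pid.two": {}},
}
aggregate = {
    "id": "org.example:example.feature:slingosgifeature:slingcms-composite-seed:1.0.0",
    "bundles": ["org.example:example.bundle:1.0.0"],
    "configurations": {"example.pid.one": {}, "example.pid.two": {}},
}
```

Here `looks_like_aggregate(seed_only)` is false and `looks_like_aggregate(aggregate)` is true, so a check like this in the build would have flagged my mistake before the instance ever started.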

Starting and Running

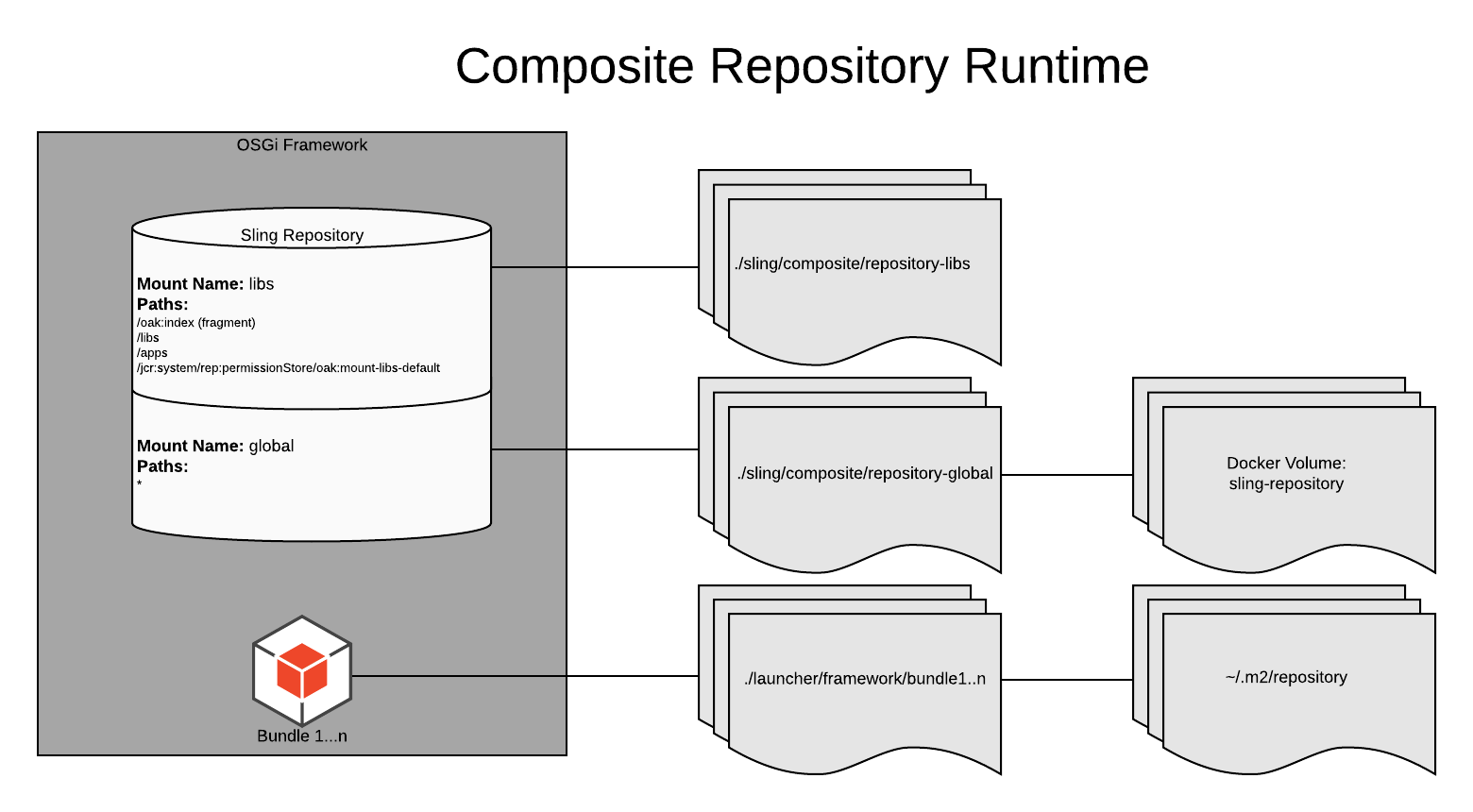

Once the repository has been fully started and seeded, we'll use a different Feature Model to run the instance. Like the Composite Seed Feature Model, the slingcms-composite-runtime Feature Model uses the composite repository; however, it runs the libs mount in read-only mode.

To use the runtime Feature Model, the CMD directive in the Dockerfile calls the Sling Feature Model Launcher with the slingcms-composite-runtime Feature Model. In addition, we'll mount a volume in the docker-compose.yml to separate the mutable volume from the container disk, so that the repository persists across restarts and container deletion.

In runtime mode, the Composite repository uses a Docker volume for the global SegmentStore and the local seeded repository for the libs SegmentStore, as shown in the diagram above.

End to End

Here's a quick video showing the process of creating a containerized version of Sling CMS with a Composite NodeStore from end to end.

Details: Build Arguments & Dependencies

The current example implementation uses Apache Maven to pull down the Feature Models, with a custom settings.xml and Build Arguments in the Dockerfile. By changing the settings.xml and the Build Arguments, you could swap in a custom Feature Model, for example, an aggregate of Sling CMS and your custom Sling CMS app.

We'll cover the process of producing a custom aggregate in the next blog post in the Exploring the Sling Feature Model series. If you'd like to learn more about the Sling Feature Model, you should check out my previous post on Converting Provisioning Models to Feature Models.